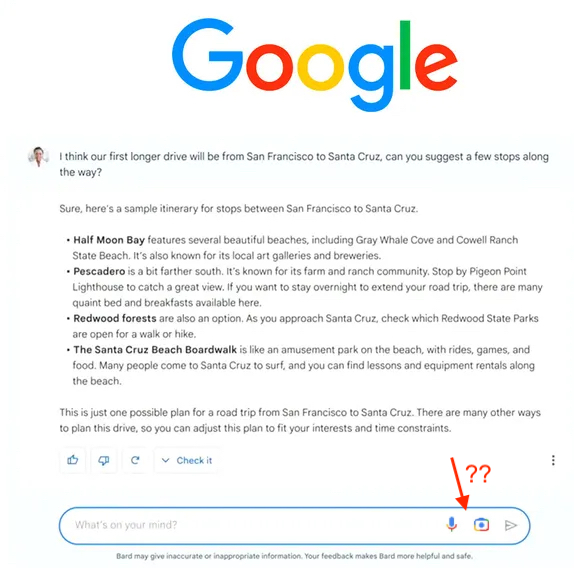

In April 2022, Google introduced multisearch, a new way of searching that lets people search using text and images at the same time. It lets users find what they are looking for, even if they don’t have all the words to describe it. Multisearch in Lens is particularly useful for visual needs, such as style and home decor questions. Users can search by color, brand, or a visual attribute, and by swiping up and tapping the “+ Add to your search” button, they can add text to the search. With multisearch in Lens, users can go beyond the search box and ask questions about what they see.

Multisearch in Google Lens

Imagine that you are browsing online and you come across a yellow dress that you like, but you really want it in green. You simply tap the Google Lens icon to highlight the yellow dress, and then add the word “green” to the search. Multisearch will now show you images of green dresses that are similar in style to the yellow one you liked.
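
To make the dress example concrete, here is a toy Python sketch of how an image-plus-text query might be combined. It is purely illustrative and is not how Google Lens works: the `Item` class, the hand-written style vectors, and the `multisearch` function are all hypothetical. The sketch assumes the photo has already been reduced to a style vector, and that the added text sets the color while style similarity drives the ranking.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass(frozen=True)
class Item:
    name: str
    color: str
    style: tuple  # hypothetical style features, e.g. (sleeve length, hem length, fit)


def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def multisearch(query_item, text_color, catalog, top_k=2):
    """Keep items in the requested color, ranked by style similarity to the photo."""
    matches = [item for item in catalog if item.color == text_color]
    matches.sort(key=lambda item: cosine(query_item.style, item.style), reverse=True)
    return matches[:top_k]


# Made-up catalog; the numbers are illustrative, not real embeddings.
catalog = [
    Item("green wrap dress", "green", (0.9, 0.8, 0.1)),
    Item("green ball gown", "green", (0.1, 0.2, 0.9)),
    Item("yellow wrap dress", "yellow", (0.9, 0.8, 0.1)),
]
yellow_dress = Item("yellow dress from the photo", "yellow", (0.9, 0.7, 0.2))

results = multisearch(yellow_dress, "green", catalog)
print([item.name for item in results])
```

In this sketch the text acts as a hard filter on color and the image only affects the ordering. A real system would more plausibly blend both signals in a learned model, but the split makes the idea easy to follow.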

Fast forward to February 2023: Google has been working on making visual search even more natural and intuitive by taking advantage of its next generation of AI-powered technology. Google plans to add a “search your screen” functionality to Lens in the coming months, letting users search what’s on their mobile screens across websites and apps. We expect this multisearch and visual search functionality of Google Lens to be implemented in Google Bard as well.

Visual Search in Google Bard?

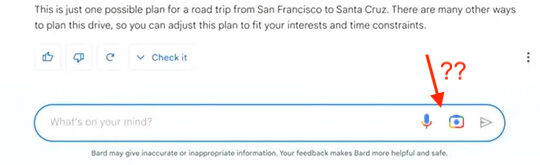

Looking ahead, it seems that Google Bard could incorporate the multisearch and visual search features in a ChatGPT-like manner. With Bard’s language and conversation capabilities powered by Google’s Language Model for Dialogue Applications (LaMDA), the AI service could use multisearch to provide more accurate and personalized responses to user queries.

For example, users could ask Bard a question such as “Can you show me a picture of a blue coffee mug?” and then follow up with a query like “Do you know where I can buy one in yellow?” Bard could then use visual search to identify similar mugs and provide links to shopping websites that sell them. Similarly, users could show a picture of their dining set and ask Bard for suggestions on coffee tables with a similar design. We wouldn’t be surprised to see a “camera” icon in Google Bard’s chat interface that lets you add an image alongside a chat query.

This ChatGPT-like interaction with Bard could make it easier for users to find what they are looking for, especially when it comes to fashion products and home decor. The combination of language and visual cues could provide more context and allow Bard to give more accurate and relevant responses. We wouldn’t be surprised to see icons for these kinds of input in a future version of Google Bard.

It’s important to note that the exact features and capabilities of Bard have not yet been fully revealed, and it remains to be seen how Google will integrate multisearch and visual search into the AI service. Nonetheless, it’s clear that Google is heavily invested in search with AI, and AI technology in general seems to be heading towards so-called “multimodal” capabilities.

Multisearch goes beyond text and images

Multimodal AI is a type of artificial intelligence that uses multiple modes of input, such as text, images, and sound, to provide a more comprehensive understanding of a given task or problem. Integrating multimodal algorithms into search therefore has the potential to take conversational AI to an even higher level. In the future, these algorithms could enable users to search for products or information using a combination of voice commands, text, images, and video.

The next iteration of OpenAI’s GPT model, GPT-4, is rumored to have multimodal features, meaning it could work with text, images, and sound. This would be a major step forward from the current ChatGPT and could have significant implications for the way we interact with AI in general. With Microsoft’s investment in OpenAI and its use of the GPT model in Bing’s chat AI, Sydney, Google will need to keep developing its multisearch capabilities to compete.

Conclusion: search with AI has high potential

The potential for multimodal AI and conversational AI is exciting, and the integration of multisearch and visual search into Google Bard would just be the beginning. However, it’s important to keep in mind that we don’t yet know how these technologies will evolve or how they will ultimately be implemented in Google or Bing. Nonetheless, the integration of multisearch has the potential to transform how we search for information and interact with AI, and the competition among tech companies to innovate in this area is sure to be fierce!


FAQs

What is multisearch?

Multisearch is a new way of searching that enables people to search using text and images at the same time. It allows users to find what they are looking for, even if they don’t have all the words to describe it. Multisearch in Google Lens is particularly useful for visual needs, including style and home decor questions.

How is multisearch useful for visual needs?

With multisearch in Lens, users can go beyond the search box and ask questions about what they see, making it easier to find what they are looking for. In the future, for example, users could show a picture of their dining set and ask Google Bard for suggestions on coffee tables with a similar design.

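What is the “search your screen” functionality in Google Lens?

“Search your screen” is a functionality that Google plans to add to Lens. It lets users search what’s on their mobile screens across websites and apps.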

Will Google Bard incorporate multisearch and visual search features?

We speculate that Google Bard could incorporate the multisearch and visual search features in a ChatGPT-like manner. With Bard’s language and conversation capabilities powered by Google’s Language Model for Dialogue Applications (LaMDA), the AI service could use multisearch to provide more accurate and personalized responses to user queries.

What is multimodal AI?

Multimodal AI is a type of artificial intelligence that uses multiple modes of input, such as text, images, and sound, to provide a more comprehensive understanding of a given task or problem. The next iteration of OpenAI’s GPT model, GPT-4, is rumored to have multimodal features.

What is the potential for search with AI?

The potential for multimodal AI and conversational AI is exciting, and the integration of multisearch and visual search into Google Bard would just be the beginning. The integration of multisearch has the potential to transform how we search for information and interact with AI, and the competition among tech companies to innovate in this area is sure to be fierce!
